Journal article

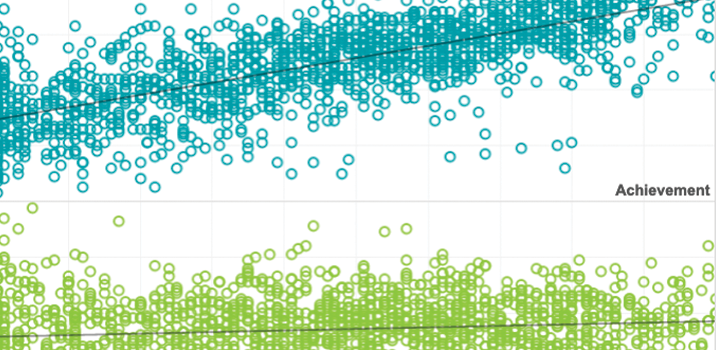

The phantom collapse of student achievement in New York

2014

By: John Cronin, Nate Jensen

Abstract

When New York State released the first results of its exams aligned to the Common Core State Standards, many people wrongly believed that the results showed dramatic declines in student achievement. A closer look at the results suggested that student achievement may actually have increased. Another lesson from the exams is that states need to closely coordinate new data with existing data when they switch to different measuring instruments.

This article was published outside of NWEA. The full text can be found at the link above.

Topics: Measurement & scaling

Related Topics

Predicting Amira Reading Mastery Based on NWEA MAP Reading Fluency Benchmark Assessment Scores

This document presents results from a linking study conducted by NWEA in May 2024 to statistically connect the grades 1–5 English Amira Reading Mastery (ARM) scores with the Scaled-Words-Correct-Per-Minute (SWCPM) scores from the MAP Reading Fluency benchmark assessment taken during Fall and Winter 2023–2024.

By: Fang Peng, Ann Hu, Christopher Wells

Products: MAP Reading Fluency

Topics: Computer adaptive testing, Early learning, Measurement & scaling, Reading & language arts

Achievement and Growth Norms for Course-Specific MAP Growth Tests

This report documents the procedure used to produce the achievement and growth user norms for a series of course-specific MAP® Growth™ subject tests: Algebra 1, Geometry, Algebra 2, Integrated Math I, Integrated Math II, Integrated Math III, and Biology/Life Science. Integrated Math I, Integrated Math II, Integrated Math III, and Biology/Life Science have norms available for the first time. The remaining tests (Algebra 1, Geometry, and Algebra 2) had their norms updated using more recent test events, which also yielded additional between-term growth norms. The report describes in detail the procedure for selecting the norm sample and the model-based approach used to generate the norms, which applies the multivariate true score model (Thum & He, 2019) to factor out the known imprecision of scores. Snapshots of the achievement and growth norms for each test are also provided.

By: Wei He

Products: MAP Growth

Topics: Measurement & scaling

Longitudinal models of reading and mathematics achievement in deaf and hard of hearing students

New research using longitudinal data provides evidence that deaf and hard of hearing (DHH) students continue to build skills in math and reading throughout grades 2 to 8, challenging the assumption that DHH students’ skills plateau in the elementary grades.

By: Stephanie Cawthon, Johny Daniel, North Cooc, Ana Vielma

Topics: Equity, Measurement & scaling

Achievement and growth norms for English MAP Reading Fluency Foundational Skills

This report documents the norming study procedure used to produce the achievement and growth user norms for English MAP Reading Fluency Foundational Skills.

By: Wei He

Products: MAP Reading Fluency

Topics: Measurement & scaling

The MAP Growth theory of action describes key features of MAP Growth and its position in a comprehensive assessment system.

By: Patrick Meyer, Michael Dahlin

Products: MAP Growth

Topics: Equity, Measurement & scaling, Test design

Bayesian uncertainty estimation for Gaussian graphical models and centrality indices

This study uses extensive simulations to compare the estimation of symptom networks under Bayesian GLASSO and Horseshoe priors with estimation using the frequentist GLASSO.

By: Joran Jongerling, Sacha Epskamp, Donald Williams

Topics: Measurement & scaling

School officials regularly use school-aggregate test scores to monitor school performance and make policy decisions. In this report, RAND researchers investigate one specific issue that may distort the use of COVID-19–era school-aggregate scores and lead to faulty comparisons with historical and other proximal aggregate scores: changes in school composition over time. To investigate this issue, they examine data from NWEA’s MAP Growth assessments, which are interim assessments used by states and districts, from the 2020–2021 school year.

By: Jonathan Schweig, Megan Kuhfeld, Andrew McEachin, Melissa Diliberti, Louis Mariano

Topics: Measurement & scaling, COVID-19 & schools