Journal article

Validation of longitudinal achievement constructs of vertically scaled computerised adaptive tests: a multiple-indicator, latent-growth modelling approach

2013

International Journal of Quantitative Research in Education, 1(4), 383–407

Abstract

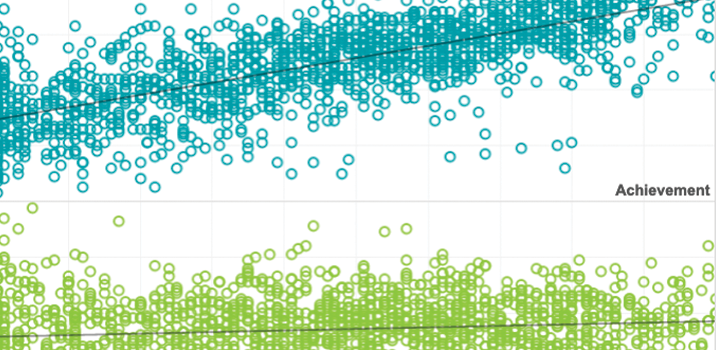

It is a commonly accepted assumption by educational researchers and practitioners that an underlying longitudinal achievement construct exists across grades in K–12 achievement tests. This assumption provides the necessary assurance to measure and interpret student growth over time. However, evidence is needed to determine whether the achievement construct remains consistent or shifts over grades or time. The current investigative study uses a multiple-indicator, latent-growth modelling (MLGM) approach to examine the longitudinal achievement construct and its invariance for MAP Growth.

This article was published outside of NWEA. The full text can be found at the link above.

Topics: Measurement & scaling, Growth modeling