Working paper

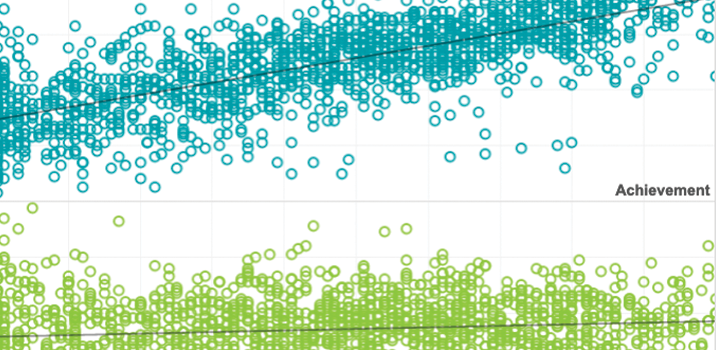

Modeling student test-taking motivation in the context of an adaptive achievement test

2015

Description

This study examined the utility of response-time-based analyses in understanding the behavior of unmotivated test takers. For an adaptive achievement test, patterns of observed rapid-guessing behavior and item response accuracy were compared to the behavior expected under several types of models that have been proposed to represent unmotivated test-taking behavior. Test taker behavior was found to be inconsistent with these models, with the exception of the effort-moderated model (S.L. Wise & DeMars, 2006). Effort-moderated scoring was found both to yield scores that were more accurate than those found under traditional scoring and to exhibit improved person fit statistics. In addition, an effort-guided adaptive test was proposed and shown to alleviate item difficulty mis-targeting caused by unmotivated test taking.
