School & test engagement

Educators need accurate assessment data to help students learn. But when students rapid-guess or otherwise disengage on tests, the validity of their scores can be compromised. Our research examines the causes of test disengagement, how it relates to students’ overall academic engagement, and how it affects individual test scores. We also look at its effects on aggregated metrics used for school and teacher evaluations, achievement gap studies, and more. This research explores better ways to measure and improve engagement and to help ensure that test scores more accurately reflect what students know and can do.

This study uses an analytic example to explore whether test metadata might help illuminate social-emotional constructs. Specifically, the analyses examine whether the amount of time students spend on test items, and on difficult items in particular, tells us anything about their academic motivation and self-efficacy once item difficulty and estimates of true achievement are accounted for.

By: James Soland

Topics: School & test engagement, Math & STEM, Social-emotional learning

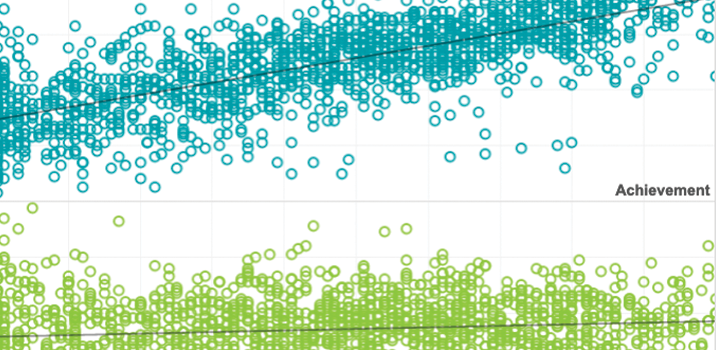

In this study, we examine the impact of two techniques to account for test disengagement—(a) removing unengaged test takers from the sample and (b) adjusting test scores to remove rapidly guessed items—on estimates of school contributions to student growth, achievement gaps, and summer learning loss.

By: Megan Kuhfeld, James Soland

Topics: Measurement & scaling, School & test engagement, Student growth & accountability policies
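
As a rough illustration of the two adjustments described in the study above, the sketch below flags rapid guesses by response time, (a) drops test takers whose share of engaged responses is too low, and (b) rescores a test using only the engaged responses. This is not the authors' method: the 3-second threshold, the 0.9 effort cutoff, the `Response` type, and all function names are assumptions made for the example, and a plain proportion correct stands in for the model-based scoring an operational assessment would use.

```python
from dataclasses import dataclass


@dataclass
class Response:
    seconds: float  # time the student spent on the item
    correct: bool   # whether the answer was right


def is_rapid_guess(response, threshold_seconds=3.0):
    # Assumed rule: anything faster than the threshold counts as a rapid guess.
    return response.seconds < threshold_seconds


def engaged_responses(responses, threshold_seconds=3.0):
    return [r for r in responses if not is_rapid_guess(r, threshold_seconds)]


def response_time_effort(responses, threshold_seconds=3.0):
    """Share of items that were not rapid-guessed (0.0 for an empty test)."""
    if not responses:
        return 0.0
    return len(engaged_responses(responses, threshold_seconds)) / len(responses)


def keep_engaged_test_takers(tests, min_effort=0.9, threshold_seconds=3.0):
    """Technique (a): drop test takers whose effort falls below the cutoff."""
    return {
        student: responses
        for student, responses in tests.items()
        if response_time_effort(responses, threshold_seconds) >= min_effort
    }


def filtered_proportion_correct(responses, threshold_seconds=3.0):
    """Technique (b): score only the items that were not rapid-guessed."""
    engaged = engaged_responses(responses, threshold_seconds)
    if not engaged:
        return None  # no engaged responses, so no score can be computed
    return sum(r.correct for r in engaged) / len(engaged)


# Hypothetical data: student "b" rapid-guesses half of the items.
tests = {
    "a": [Response(20, True), Response(15, False), Response(30, True), Response(25, True)],
    "b": [Response(1, False), Response(2, True), Response(40, True), Response(35, False)],
}
```

With this made-up data, `keep_engaged_test_takers(tests)` retains only student "a", while `filtered_proportion_correct(tests["b"])` scores student "b" on the two items answered with apparent effort. The trade-off is that approach (a) shrinks the sample, while approach (b) keeps every student but bases some scores on fewer items.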

The emerging science of test-taking disengagement

Student performance on standardized tests reflects more than just mastery of the material.

By: Steven Wise

Topics: Measurement & scaling, Innovations in reporting & assessment, School & test engagement

An information-based approach to identifying rapid-guessing thresholds

Although several common threshold methods are based on rapid guessing response accuracy or visual inspection of response time distributions, this paper describes a new information-based approach to setting thresholds that does not share the limitations of other methods.

By: Steven Wise

The (non)impact of differential test taker engagement on aggregated scores

Disengaged test taking tends to be most prevalent with low-stakes tests. This has led to questions about the validity of aggregated scores from large-scale international assessments such as PISA and TIMSS, as previous research has found a meaningful correlation between the mean engagement and mean performance of countries.

By: Steven Wise, James Soland, Yuanchao Bo

Can test metadata help schools measure social-emotional learning?

Social-emotional learning (SEL) competencies like self-efficacy and conscientiousness can be predictive of long-term academic achievement. But they can also be difficult to measure. In a new study led by NWEA’s James Soland, researchers investigated whether assessment metadata – the way students approach tests and surveys – can provide useful SEL data to schools and educators. Soland joins CPRE research specialist Tesla DuBois to discuss his findings, their implications, and the promise and limitations of student metadata in general.

Consortium for Policy Research in Education Knowledge Hub podcast

Mentions: James Soland

Topics: School & test engagement, Innovations in reporting & assessment, Social-emotional learning

Computer-based testing offers glimpse into ‘rapid guessing’ habits

When students speed through a computer-based test, their responses are far less likely to be accurate than if they took longer to find the solution, according to new research.

Education Dive

Mentions: Steven Wise

Topics: Equity, School & test engagement