Journal article

“No fun games”: Engagement effects of two gameful assessment prototypes

2018

Journal of Research on Technology in Education, 50(2), 134-148.

Abstract

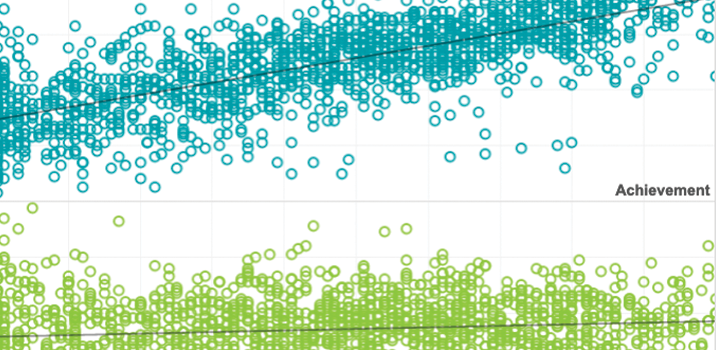

Assessments with features of games propose to address student motivation deficits common in traditional assessments. This study examines the impact of two “gameful assessment” prototypes on student engagement and teacher perceptions among 391 Grades 3–7 students and 14 teachers in one Midwestern and one Northwestern school. Using mixed methods, it finds higher satisfaction for students taking gameful assessments, and conflicting attitudes from teachers regarding the impact of gameful assessments on students’ intrinsic motivation and desire to learn. The article concludes by discussing opportunities for continued iteration and innovation in gameful assessment design.

This article was published outside of NWEA. The full text can be found at the link above.