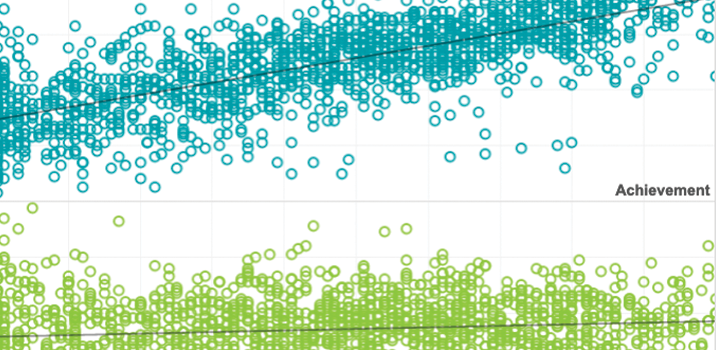

School & test engagement

Educators need accurate assessment data to help students learn. But when students rapid-guess or otherwise disengage on tests, the validity of their scores can be compromised. Our research examines the causes of test disengagement, how it relates to students’ overall academic engagement, and its impacts on individual test scores. We also look at its effects on aggregated metrics used for school and teacher evaluations, achievement gap studies, and more. This research also explores better ways to measure and improve engagement and to help ensure that test scores more accurately reflect what students know and can do.

Parameter estimation accuracy of the effort-moderated IRT model under multiple assumption violations

This session from the National Council on Measurement in Education 2020 virtual conference presents new research findings on understanding and managing test-taking disengagement.

By: James Soland, Joseph Rios

Using retest data to evaluate and improve effort-moderated scoring

This session from the National Council on Measurement in Education 2020 virtual conference presents new research findings on understanding and managing test-taking disengagement (presentation begins at 22:55).

By: Steven Wise, Megan Kuhfeld

This session from the 2020 National Council on Measurement in Education virtual conference presents new research findings on understanding and managing test-taking disengagement (presentation begins at 22:55).

By: James Soland, Steven Wise

Topics: School & test engagement

Using retest data to evaluate and improve effort-moderated scoring

This study investigated effort‐moderated (E‐M) scoring, in which item responses classified as rapid guesses are identified and excluded from scoring, and its effect on score distortion from disengaged test taking.

By: Steven Wise, Megan Kuhfeld

Topics: Measurement & scaling, Innovations in reporting & assessment, School & test engagement
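
As a rough illustration of the idea behind effort-moderated scoring described above, the sketch below flags responses faster than a per-item time threshold as rapid guesses and leaves them out of the score. It is a simplification under stated assumptions, not the method used in the study: the score here is a plain proportion correct rather than an IRT-based estimate, and the `Response` fields, the threshold values, and the function names are all hypothetical.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass
class Response:
    correct: bool      # whether the response was scored as correct
    seconds: float     # time the test taker spent on the item
    threshold: float   # hypothetical rapid-guess cutoff for this item, in seconds


def is_rapid_guess(response: Response) -> bool:
    """Classify a response as a rapid guess if it was faster than the cutoff."""
    return response.seconds < response.threshold


def raw_score(responses: Sequence[Response]) -> Optional[float]:
    """Proportion correct over all responses, rapid guesses included."""
    if not responses:
        return None
    return sum(r.correct for r in responses) / len(responses)


def effort_moderated_score(responses: Sequence[Response]) -> Optional[float]:
    """Proportion correct over engaged responses only (rapid guesses excluded).

    Returns None when every response was a rapid guess, since no engaged
    responses remain to score.
    """
    engaged = [r for r in responses if not is_rapid_guess(r)]
    if not engaged:
        return None
    return sum(r.correct for r in engaged) / len(engaged)


# Illustrative data only: two engaged responses and two rapid guesses.
example = [
    Response(correct=True, seconds=30.0, threshold=5.0),
    Response(correct=False, seconds=2.0, threshold=5.0),
    Response(correct=True, seconds=40.0, threshold=5.0),
    Response(correct=False, seconds=1.0, threshold=5.0),
]
print(raw_score(example), effort_moderated_score(example))
```

In this made-up example the two rapid guesses pull the raw score down, while the effort-moderated score reflects only the items the test taker actually attempted.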

An intelligent CAT that can deal with disengaged test taking

This book presents varied applications of artificial intelligence (AI) in test development, including research and successful examples of using AI technology in automated item generation, automated test assembly, automated scoring, and computerized adaptive testing.

By: Steven Wise

Topics: Measurement & scaling, Innovations in reporting & assessment, School & test engagement

A cessation of measurement: Identifying test taker disengagement using response time

This chapter explores both what happens when test takers disengage and how this disengagement should be managed during scoring.

By: Steven Wise, Megan Kuhfeld

Topics: School & test engagement

Looking back: how prior-year attendance impacts starting achievement

This research uses interim assessment test results to measure the impact of prior-year attendance on starting achievement the following year. Results show the impacts are significant and persistent.

By: Shannon Bi, Emily Wolk

Topics: School & test engagement, Student growth & accountability policies