School & test engagement

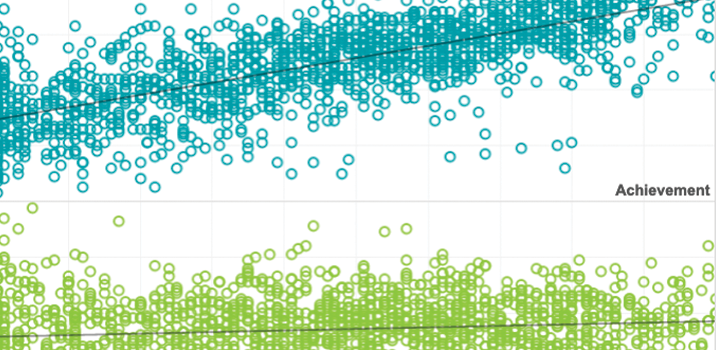

Educators need accurate assessment data to help students learn. But when students rapid-guess or otherwise disengage on tests, the validity of their scores can be compromised. Our research examines the causes of test disengagement, how it relates to students’ overall academic engagement, and its impact on individual test scores. We also look at its effects on aggregated metrics used for school and teacher evaluations, achievement gap studies, and more. Finally, this research explores better ways to measure and improve engagement and to help ensure that test scores more accurately reflect what students know and can do.

An investigation of examinee test-taking effort on a large-scale assessment

Most previous research involving the study of response times has been conducted using locally developed instruments. The purpose of the current study was to examine the amount of rapid-guessing behavior within a commercially available, low-stakes instrument.

By: Steven Wise, J. Carl Setzer, Jill R. van den Heuvel, Guangming Ling

Topics: Measurement & scaling, School & test engagement, Student growth & accountability policies
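To make "amount of rapid-guessing behavior" concrete, here is a minimal sketch, not the study's procedure. It assumes each item already has a response-time threshold in seconds and counts a response as a rapid guess when it comes in faster than that threshold. It then reports the rapid-guessing rate per item and overall. The thresholds, data, and the function name `rapid_guess_rates` are hypothetical.

```python
from typing import List, Tuple


def rapid_guess_rates(
    times: List[List[float]], thresholds: List[float]
) -> Tuple[List[float], float]:
    """Return (per-item rapid-guessing rate, overall rate).

    times[i][j] is examinee i's response time (seconds) on item j;
    thresholds[j] is the assumed rapid-guessing threshold for item j.
    A response counts as a rapid guess when it is faster than the threshold.
    """
    n_examinees, n_items = len(times), len(thresholds)
    if n_examinees == 0 or n_items == 0:
        raise ValueError("need at least one examinee and one item")
    counts = [0] * n_items
    for row in times:
        if len(row) != n_items:
            raise ValueError("each examinee needs one time per item")
        for j, rt in enumerate(row):
            if rt < thresholds[j]:
                counts[j] += 1
    per_item = [c / n_examinees for c in counts]
    overall = sum(counts) / (n_examinees * n_items)
    return per_item, overall


# Hypothetical data: 4 examinees x 3 items, times in seconds.
thresholds = [3.0, 5.0, 4.0]
times = [
    [1.2, 20.0, 15.0],
    [10.0, 2.5, 3.9],
    [8.0, 30.0, 12.0],
    [2.0, 1.0, 25.0],
]
per_item, overall = rapid_guess_rates(times, thresholds)
print(per_item, overall)
```

With these made-up numbers, half of the responses to the first two items and a quarter of the responses to the third are flagged, so 5 of 12 responses overall.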

The utility of adaptive testing in addressing the problem of unmotivated examinees

This integrative review examines the motivational benefits of computerized adaptive tests (CATs) and demonstrates that they can have important advantages over conventional tests, both in identifying instances when examinees are exhibiting low effort and in effectively addressing the validity threat posed by unmotivated examinees.

By: Steven Wise

Topics: Measurement & scaling, Innovations in reporting & assessment, School & test engagement

Effort analysis: Individual score validation of achievement test data

Whenever the purpose of measurement is to inform an inference about a student’s achievement level, it is important that we be able to trust that the student’s test score accurately reflects what that student knows and can do. Such trust requires the assumption that a student’s test event is not unduly influenced by construct-irrelevant factors that could distort his score. This article examines one such factor—test-taking motivation—that tends to induce a person-specific, systematic negative bias on test scores.

By: Steven Wise

Topics: Measurement & scaling, Innovations in reporting & assessment, School & test engagement
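One common way to turn response times into an individual effort check is the response time effort (RTE) index: the proportion of items on which the examinee showed solution behavior, meaning a response time at or above the item's threshold. The sketch below computes RTE for one test event and flags the event when RTE falls below a cutoff. The 0.90 cutoff, the thresholds, and the helper names are assumptions for illustration. Whether the article uses exactly this index and cutoff is not stated here.

```python
from typing import List

RTE_CUTOFF = 0.90  # assumed flagging cutoff, not taken from the article


def response_time_effort(times: List[float], thresholds: List[float]) -> float:
    """Proportion of items answered with solution behavior (time >= threshold)."""
    if not times or len(times) != len(thresholds):
        raise ValueError("need one response time per item")
    solution = sum(1 for rt, th in zip(times, thresholds) if rt >= th)
    return solution / len(times)


def flag_test_event(times: List[float], thresholds: List[float],
                    cutoff: float = RTE_CUTOFF) -> bool:
    """True when effort looks too low for the score to be trusted as-is."""
    return response_time_effort(times, thresholds) < cutoff


# Hypothetical 10-item test event: 8 effortful responses, 2 rapid guesses.
thresholds = [3.0] * 10
times = [25.0, 18.0, 40.0, 12.0, 30.0, 22.0, 15.0, 9.0, 1.1, 0.8]
print(response_time_effort(times, thresholds), flag_test_event(times, thresholds))
```

Here RTE is 0.8, so the event would be flagged for review rather than reported at face value.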

Response time as an indicator of test taker speed: assumptions meet reality

The growing presence of computer-based testing has brought with it the capability to routinely capture the time that test takers spend on individual test items. This, in turn, has led to an increased interest in potential applications of response time in measuring intellectual ability and achievement. Goldhammer (this issue) provides a very useful overview of much of the research in this area, and he provides a thoughtful analysis of the speed-ability trade-off and its impact on measurement.

By: Steven Wise

Topics: Measurement & scaling, Innovations in reporting & assessment, School & test engagement

Modeling student test-taking motivation in the context of an adaptive achievement test

This study examined the utility of response time-based analyses in understanding the behavior of unmotivated test takers. For an adaptive achievement test, patterns of observed rapid-guessing behavior and item response accuracy were compared to the behavior expected under several types of models that have been proposed to represent unmotivated test-taking behavior.

Topics: Measurement & scaling, Growth modeling, School & test engagement
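As a simple illustration of comparing observed accuracy with model expectations, the sketch below checks one basic expectation: if rapid guesses are uniform random picks, their accuracy should sit near chance (1 divided by the number of options), while solution-behavior responses should be more accurate. This is not one of the study's models. The `Response` record, the thresholds, and the data are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Response:
    time: float       # response time in seconds
    threshold: float  # item's rapid-guessing threshold (assumed known)
    correct: bool
    n_options: int    # number of answer choices on the item


def _accuracy(rs: List[Response]) -> Optional[float]:
    """Proportion correct, or None if there are no responses."""
    return sum(r.correct for r in rs) / len(rs) if rs else None


def compare_to_chance(responses: List[Response]) -> Dict[str, Optional[float]]:
    rapid = [r for r in responses if r.time < r.threshold]
    effortful = [r for r in responses if r.time >= r.threshold]
    # Accuracy expected if every rapid guess were a uniform random pick among options.
    chance = sum(1 / r.n_options for r in rapid) / len(rapid) if rapid else None
    return {
        "rapid_accuracy": _accuracy(rapid),
        "chance_accuracy": chance,
        "solution_accuracy": _accuracy(effortful),
    }


# Hypothetical 4-option items: 4 rapid guesses (1 correct), 4 effortful (3 correct).
responses = (
    [Response(1.0, 3.0, c, 4) for c in (True, False, False, False)]
    + [Response(20.0, 3.0, c, 4) for c in (True, True, True, False)]
)
print(compare_to_chance(responses))
```

A rapid-guess accuracy well above chance would suggest that some flagged responses were not pure guesses, which is the kind of discrepancy a model comparison can surface.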

Rapid-guessing behavior: Its identification, interpretation, and implications

The rise of computer-based testing has brought with it the capability to measure more aspects of a test event than simply the answers selected or constructed by the test taker. One behavior that has drawn much research interest is the time test takers spend responding to individual multiple-choice items.

By: Steven Wise

Topics: Measurement & scaling, Innovations in reporting & assessment, School & test engagement
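Identifying rapid guesses requires a time threshold for each item. One widely used option is a normative threshold: a fixed percentage of the item's mean observed response time, capped at a maximum number of seconds. The sketch below assumes 10% and a 10-second cap as defaults; both values, the data, and the function name are assumptions, not necessarily the choices made in this article.

```python
from statistics import mean
from typing import Dict, List


def normative_thresholds(item_times: Dict[str, List[float]],
                         pct: float = 0.10,
                         cap: float = 10.0) -> Dict[str, float]:
    """Threshold per item = pct * mean observed response time, capped at `cap` seconds.

    Items with no observed times are skipped.
    """
    return {item: min(pct * mean(ts), cap) for item, ts in item_times.items() if ts}


# Hypothetical response times (seconds) pooled across examinees.
observed = {
    "item_A": [20.0, 40.0, 30.0],   # mean 30 s -> 3 s threshold
    "item_B": [200.0, 300.0],       # mean 250 s -> 25 s, capped at 10 s
}
print(normative_thresholds(observed))
```

The cap keeps very long items (for example, reading passages) from receiving thresholds so high that genuine but quick effortful responses would be misclassified as guesses.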