Innovations in reporting & assessment

Emerging technologies allow for a variety of methods to assess students and report data specific to the needs of different stakeholders. These various approaches can result in assessments that are more engaging for students, along with reporting that provides more insightful, useful information for students, families, and educators.

This manuscript reports results from two studies conducted during the development of KinderTEK, an iPad-delivered kindergarten mathematics intervention, to determine the relationship between instructor-reported technology experience and intervention implementation, as measured by student use.

By: Lina Shanley, Mari Strand Cary, Ben Clarke, Meg Guerreiro, Michael Thier

A general approach to measuring test-taking effort on computer-based tests

The current study outlines a general process for measuring item-level effort that can be applied to an expanded set of item types and test-taking behaviors (such as omitted or constructed responses). This process, which is illustrated with data from a large-scale assessment program, should improve our ability to detect non-effortful test taking and perform individual score validation.

By: Steven Wise, Lingyun Gao

Topics: Measurement & scaling, Innovations in reporting & assessment, Student growth & accountability policies

Rapid‐guessing behavior: Its identification, interpretation, and implications

The rise of computer‐based testing has brought with it the capability to measure more aspects of a test event than simply the answers selected or constructed by the test taker. One behavior that has drawn much research interest is the time test takers spend responding to individual multiple‐choice items.

By: Steven Wise

Topics: Measurement & scaling, Innovations in reporting & assessment, School & test engagement

Are all biases bad? Collaborative grounded theory in developmental evaluation of education policy

By: Ross Anderson, Meg Guerreiro, Jo Smith

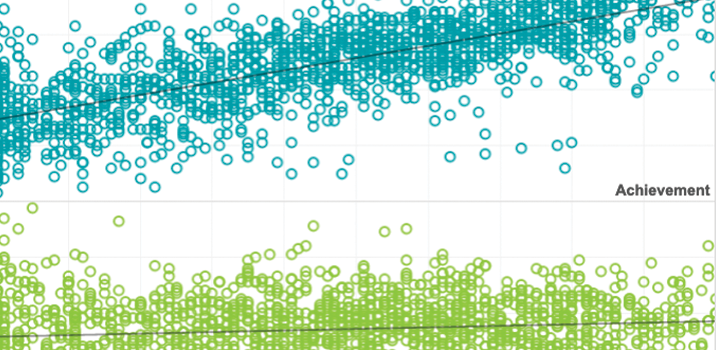

Modeling student test-taking motivation in the context of an adaptive achievement test

This study examined the utility of response time‐based analyses in understanding the behavior of unmotivated test takers. For the data from an adaptive achievement test, patterns of observed rapid‐guessing behavior and item response accuracy were compared to the behavior expected under several types of models that have been proposed to represent unmotivated test-taking behavior.

Topics: Innovations in reporting & assessment, Measurement & scaling, School & test engagement

Response time as an indicator of test taker speed: assumptions meet reality

The growing presence of computer-based testing has brought with it the capability to routinely capture the time that test takers spend on individual test items. This, in turn, has led to an increased interest in potential applications of response time in measuring intellectual ability and achievement. Goldhammer (this issue) provides a very useful overview of much of the research in this area, and he provides a thoughtful analysis of the speed-ability trade-off and its impact on measurement.

By: Steven Wise

Topics: Measurement & scaling, Innovations in reporting & assessment, School & test engagement

Effort analysis: Individual score validation of achievement test data

Whenever the purpose of measurement is to inform an inference about a student’s achievement level, it is important that we be able to trust that the student’s test score accurately reflects what that student knows and can do. Such trust requires the assumption that a student’s test event is not unduly influenced by construct-irrelevant factors that could distort his score. This article examines one such factor—test-taking motivation—that tends to induce a person-specific, systematic negative bias on test scores.

By: Steven Wise

Topics: Measurement & scaling, Innovations in reporting & assessment, School & test engagement