Working paper

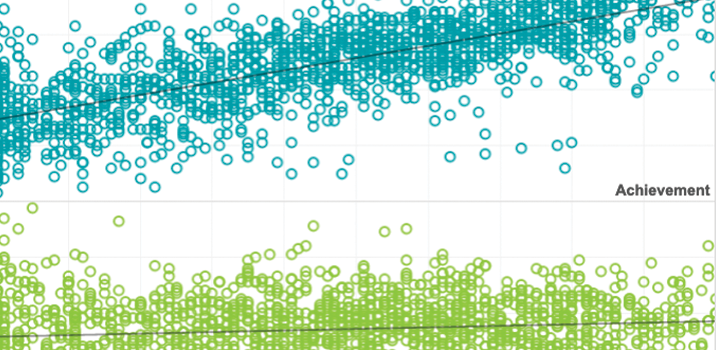

Using assessment metadata to quantify the impact of test disengagement on estimates of educational effectiveness

2019

Description

Educational stakeholders have long known that students might not be fully engaged when taking an achievement test, and that such disengagement could undermine the inferences drawn from observed scores. Thanks to the growing prevalence of computer-based tests and the new forms of metadata they produce, researchers have developed and validated procedures for using item response times (the seconds that elapse between when an item is presented and when it is answered) to identify responses that are likely disengaged. In this study, we introduce these disengagement metrics to a policy and evaluation audience and explain how disengagement might bias estimates of educational effectiveness. Analytically, we use data from a state administering a computer-based test to examine the effect of test disengagement on estimates of school contributions to student growth, achievement gaps, and summer learning loss. In so doing, we broaden the literature on how test disengagement can influence uses of aggregated test scores, provide guidance for policymakers and evaluators on how to account for disengagement in their own work, and consider the promise and limitations of using achievement test metadata for related purposes.
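To make the idea of response-time-based flagging concrete, here is a minimal sketch of one possible rule, not the procedure used in this paper. It assumes a hypothetical per-item threshold equal to 10% of the item's mean response time, capped at 10 seconds; responses faster than the threshold are treated as likely disengaged. It then summarizes each student as the share of responses at or above the threshold (1.0 means no responses were flagged). The names `item_thresholds`, `response_time_effort`, `FRACTION`, and `MAX_THRESHOLD` are illustrative only.

```python
# Illustrative sketch only: the threshold rule and its parameters are
# assumptions, not values taken from this paper.
FRACTION = 0.10       # hypothetical: threshold = 10% of the item's mean time
MAX_THRESHOLD = 10.0  # hypothetical: threshold never exceeds 10 seconds


def item_thresholds(times_by_item):
    """Map each item ID to a response-time threshold in seconds.

    times_by_item: dict of item ID -> list of observed response times (s).
    """
    thresholds = {}
    for item, times in times_by_item.items():
        mean_time = sum(times) / len(times)
        thresholds[item] = min(FRACTION * mean_time, MAX_THRESHOLD)
    return thresholds


def response_time_effort(student_times, thresholds):
    """Share of a student's responses at or above the item threshold.

    student_times: dict of item ID -> that student's response time (s).
    Responses below the threshold count as likely disengaged.
    """
    engaged = [t >= thresholds[item] for item, t in student_times.items()]
    return sum(engaged) / len(engaged)


if __name__ == "__main__":
    # Toy response times (seconds) for two items across four students.
    times = {"q1": [30, 40, 2, 48], "q2": [60, 55, 3, 62]}
    th = item_thresholds(times)  # q1: 3.0 s, q2: 4.5 s
    print(response_time_effort({"q1": 2, "q2": 60}, th))  # 0.5
```

In this toy example, the 2-second answer to `q1` falls below its 3-second threshold and is flagged, so the student's effort score is 0.5. A per-student score like this is one way flagged responses could later be filtered or adjusted before scores are aggregated to schools or groups.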
